Read only on Litres

This book cannot be downloaded as a file, but it can be read in our app or online on our website.

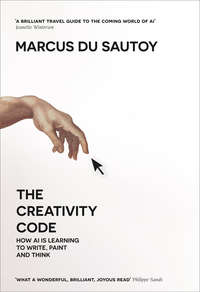

Read the book: «The Creativity Code: How AI is learning to write, paint and think», page 4

₺1.231,35

Genres and tags

Age restriction: 0+

Release date on Litres: 13 September 2019

Length: 400 pp., 35 illustrations

ISBN: 9780008288167

Copyright holder: HarperCollins